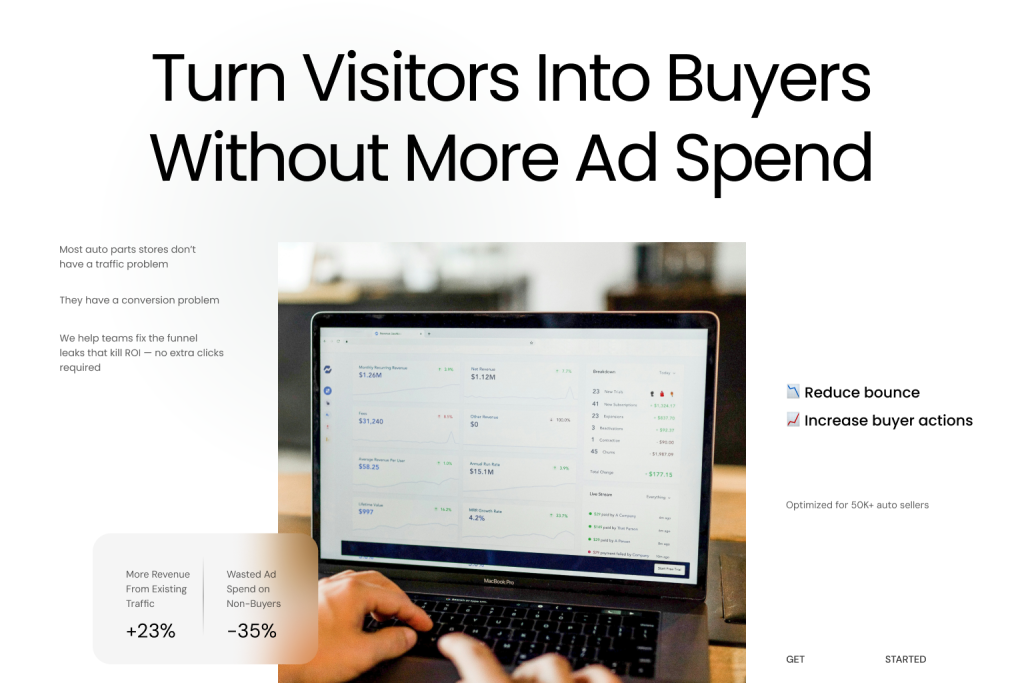

Split testing, also known as A/B testing, compares two versions of a web page to identify which one performs better. It’s key for improving user engagement and conversions. This article covers the benefits of split testing, the steps for running a test, common mistakes, and the tools and techniques that support successful split tests.

Key Takeaways

- Split testing, or A/B testing, allows marketers to compare different web page versions to improve user engagement, conversion rates, and overall site effectiveness.

- Key steps in conducting a split test include defining clear objectives, formulating a focused hypothesis, calculating an adequate sample size, and creating effective variations for testing.

- Knowing the baseline conversion rate is crucial for calculating the sample size needed for reliable split test results.

- Common pitfalls to avoid in split testing include lacking a defined hypothesis, insufficient user traffic, and testing too many variables simultaneously, which can lead to unreliable results.

Introduction to Split Testing

Split testing, also known as A/B testing, is a method of running controlled, randomized experiments to improve website metrics and, ultimately, business revenue. It involves creating two versions of a web page, email, or other digital asset and comparing their performance to determine which one drives better results. The primary objective is to increase conversions and revenue by making data-driven decisions. By using split testing tools, businesses can collect relevant data, analyze test results, and identify areas for improvement. Split testing is essential in digital marketing because it lets marketers test hypotheses, measure the impact of different versions, and determine which elements significantly affect user behavior.

Understanding Split Testing

A/B testing, often referred to as split testing, involves assessing two distinct versions of a web page in order to ascertain which one yields superior results. The primary objective is the examination of how modifications on a web page can influence user actions, such as elevating conversion rates or enhancing customer satisfaction. By trialing different components on the webpage, marketers are able to collect data and gather definitive and actionable insights regarding what resonates most effectively with their target demographic.

One significant benefit of utilizing split testing is its capacity for optimizing web pages so that they better cater to users’ needs and foster deeper engagement. This method provides an efficient way for trying out varied marketing approaches directly within the marketplace context, making it an indispensable asset when seeking ways to optimize online platforms without excessive expenditure or wasted effort.

A/B testing is a systematic, economical way to evaluate website elements. It gives digital marketers evidence-based guidance for refining their websites progressively. The right split testing tools help pinpoint the changes that produce meaningful outcomes, and those learnings can be carried into future initiatives with confidence, based on empirical results rather than conjecture.

Key Benefits of Split Testing

Split testing serves the fundamental goal of elevating crucial website metrics, such as conversion or click-through rates. By employing a strategy centered around user-driven choices, marketers can refine their campaigns by leveraging actual data on how users interact with their content. The reliance on this data-centric methodology is essential for amplifying conversions and propelling sales forward based on the data collected from split tests.

Running an effective split test can yield substantial improvements in key business indicators. For example, through diligent split testing, Zalora saw a 12.3% uplift in its e-commerce checkout rate. In email marketing, split tests have likewise been shown to raise open rates and conversion rates, and they offer a clearer picture of consumer preferences.

Continuous testing offers deeper insight into what consumers prefer and how different customer groups behave. This helps businesses stay ahead of competitors and make informed decisions that improve key metrics such as bounce rate, active user count, and overall user engagement.

Essential Steps for Conducting a Split Test

To carry out an effective split test, one must adopt a methodical strategy. It is essential to initially establish the aims of your experiment and pinpoint what you aspire to accomplish through the testing process, considering different segments of your audience. A hypothesis can then be crafted in alignment with these targets and existing performance metrics, concentrating on a singular variable to precisely assess its effect.

Ensuring that your sample size is adequately calculated stands as another vital step towards attaining statistical significance. Delving into extensive research and having a solid grasp of how your current system performs are fundamental for determining the needed sample size that will produce trustworthy outcomes. Once these critical components are secured, you can proceed to develop robust variations for your test and commence with executing the split test.

Read more in our previous article:

Understanding AB Testing vs Hypothesis Testing: Key Differences Explained

Identify the Objective

Start looking for split test opportunities in data from web analytics services such as Adobe Analytics or Google Analytics. Keep an eye on essential indicators, including bounce rate and average session length, to identify pages that aren’t performing well. Focus your testing efforts on pages that either attract a large number of website visitors or suffer from poor conversion rates. For email campaigns, consider testing subject lines to improve engagement.

Collect feedback from your audience to uncover points where they encounter confusion or obstacles along their user journey. Heat mapping used alongside analytical tools can highlight potential improvements on a web page. This data-grounded approach helps ensure that your split tests target elements with significant impact, making successful outcomes more likely.
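As a rough illustration of focusing on high-traffic or poorly converting pages, the sketch below ranks pages by how many conversions they appear to miss relative to the site-wide rate. The `PageStats` fields, the `min_sessions` cut-off, and the ranking heuristic are all assumptions made for this example, not a standard analytics export or an established method.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Hypothetical per-page figures copied from an analytics report."""
    path: str
    sessions: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.sessions if self.sessions else 0.0


def rank_test_candidates(pages, min_sessions=1000):
    """Order pages by estimated missed conversions versus the site-wide rate.

    Pages below `min_sessions` are skipped because they are unlikely to
    collect enough data for a reliable test.
    """
    eligible = [p for p in pages if p.sessions >= min_sessions]
    total_sessions = sum(p.sessions for p in eligible)
    total_conversions = sum(p.conversions for p in eligible)
    site_rate = total_conversions / total_sessions if total_sessions else 0.0

    def missed_conversions(page):
        # Only pages converting below the site-wide rate count as "missing" any.
        return max(0.0, (site_rate - page.conversion_rate) * page.sessions)

    return sorted(eligible, key=missed_conversions, reverse=True)


if __name__ == "__main__":
    pages = [
        PageStats("/pricing", sessions=10_000, conversions=100),
        PageStats("/home", sessions=20_000, conversions=800),
        PageStats("/blog", sessions=500, conversions=1),
    ]
    for page in rank_test_candidates(pages):
        print(page.path, f"{page.conversion_rate:.2%}")
```

A ranking like this is only a starting point; qualitative feedback and heat maps still decide what to change on the page.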

Formulate a Hypothesis

Crafting a hypothesis is pivotal when undertaking split testing. The hypothesis should be grounded in data analysis and insights into user behavior. To measure the influence of a change precisely, isolate and test only one element at a time, and direct equal traffic to each test version so the results are reliable. An impact matrix can help organize your hypotheses by sorting them into four categories based on their potential effect, how confident you are in them, and how easy they are to execute.

Your hypothesis should aim at improved outcomes, informed by how your audience interacts with your content. Prioritize adjustments that could improve key metrics, such as reducing bounce rates.

Testing in this manner builds up knowledge that guides subsequent experiments, combining an optimization method with structured experimentation based on relevant data.
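The three criteria named above (potential effect, confidence, and ease of execution) can also be turned into a simple numeric ranking. The sketch below swaps the four-category matrix for an averaged score. The 1 to 10 scale, the equal weighting, and the example hypotheses are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A test idea scored from 1 (low) to 10 (high) on each criterion."""
    name: str
    impact: int      # expected effect on the target metric
    confidence: int  # how strongly data supports the idea
    ease: int        # how easy the change is to build and ship

    @property
    def score(self) -> float:
        # Equal weighting is an assumption; adjust to suit your team.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(hypotheses):
    """Return hypotheses ordered from highest to lowest score."""
    return sorted(hypotheses, key=lambda h: h.score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Hypothesis("Shorter signup form", impact=8, confidence=6, ease=4),
        Hypothesis("Clearer CTA wording", impact=6, confidence=7, ease=9),
    ]
    for h in prioritize(backlog):
        print(f"{h.score:.1f}  {h.name}")
```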

Calculate Sample Size

To obtain reliable outcomes from a split test, you need to reach statistical significance, which depends on how much traffic the experiment receives. Calculate the required sample size before you launch. As a rule of thumb, look for no fewer than 200 conversions before treating a result as statistically significant.
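A sample size estimate can be sketched with the standard two-proportion formula. The function below assumes a two-sided test, a significance level of 0.05, and 80% power. Those defaults, the function name, and the example baseline are illustrative assumptions, and dedicated calculators may use slightly different approximations.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: smallest lift worth detecting, e.g. 0.20 for +20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical page: 5% baseline conversion, aiming to detect a 20% lift.
    print(sample_size_per_variant(0.05, 0.20))
```

The smaller the lift you want to detect, the more visitors each variant needs, which is why the baseline conversion rate matters so much in planning.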

Compared to the Frequentist method, a Bayesian model can sometimes yield reliable results with fewer site visitors. Plan around a minimum detectable effect of about 20%, so that differences above this threshold can be attributed with reasonable confidence to the changes in your test rather than to noise.
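For readers curious what a Bayesian comparison looks like in practice, here is a minimal sketch using a Beta-Binomial model with a uniform Beta(1, 1) prior and Monte Carlo sampling. The prior, the number of draws, and the function name are assumptions. Commercial tools use their own models, so treat this as an illustration rather than a reproduction of any product.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate the posterior probability that variant B converts better than A.

    Each conversion rate gets a Beta(1 + conversions, 1 + non-conversions)
    posterior, which follows from a uniform prior and binomial data.
    """
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical counts: 50/1000 conversions for A, 70/1000 for B.
    print(prob_b_beats_a(50, 1000, 70, 1000))
```

The output reads as "the probability that B is better than A given the data", which many teams find easier to act on than a p-value. It does not remove the need for adequate traffic.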

Accurately determining the necessary sample size enhances the trustworthiness of your split test findings and allows you to make choices grounded on empirical evidence gathered during testing.

Creating Effective Test Variations

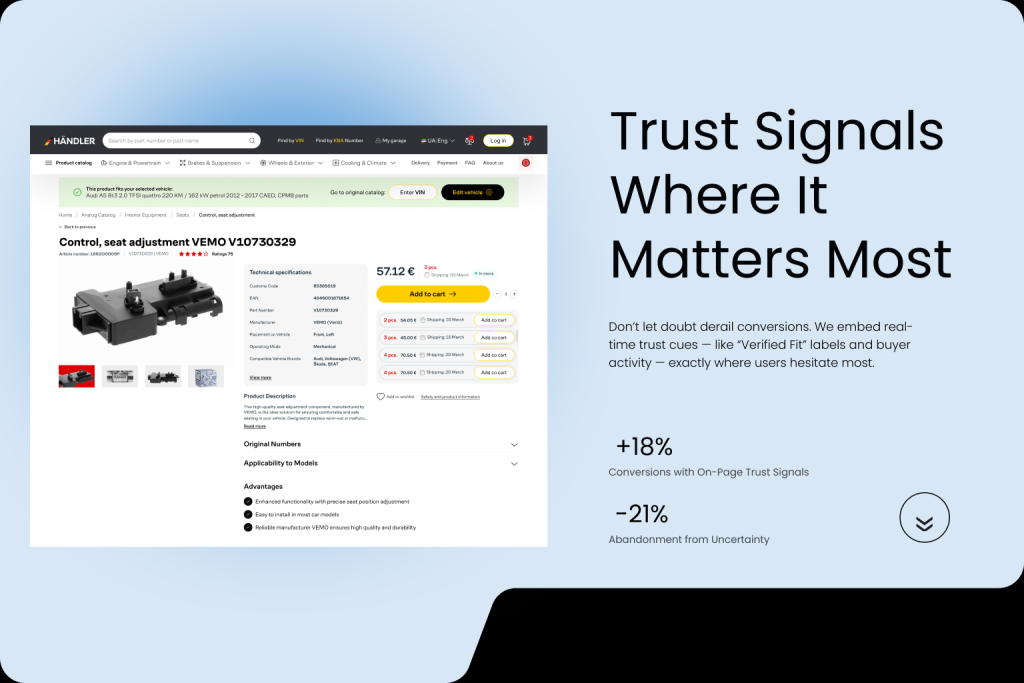

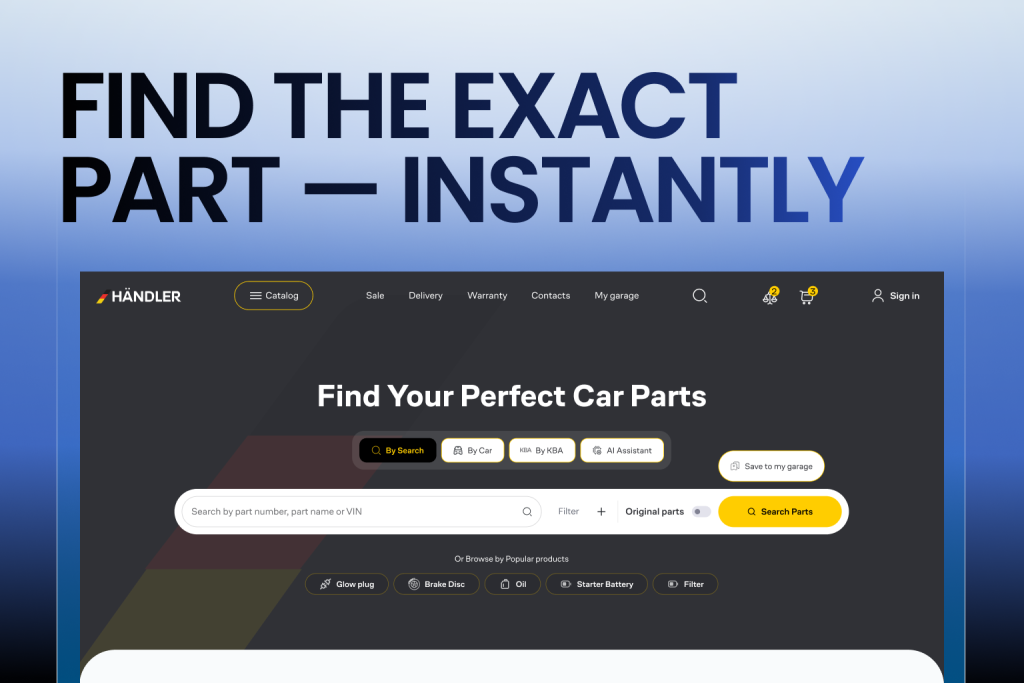

In digital marketing, split tests depend on strategically crafted test variations. Consider the source of any visuals used in these tests. Analytics and heat mapping tools can help identify where your website may benefit from improvements. When making such optimizations, prioritize significant alterations over trivial tweaks to use resources efficiently. For instance, experimenting with diverse headline styles, from the direct to the imaginative, can show what captures your target audience’s attention most effectively.

To enhance user engagement and foster a stronger connection with your audience, tailor the tone and style of your content accordingly. The format of calls to action also holds considerable sway over user involvement and conversion rates. It’s worth testing different versions in this regard. Likewise, assess various types of visual elements that could be instrumental in bolstering conversions throughout your marketing campaigns.

Designing variants for split testing means considering how each element will resonate with your target demographic’s preferences and requirements. Each variant is a nearly identical copy of an existing page that incorporates deliberate modifications for comparison, ideally few enough that you can tell which change produced the difference when the variant is tested against the original. Working this way makes each new version more likely to generate meaningful results across a campaign.

Running and Monitoring Your Split Test

Before launching a split test, carefully inspect every element involved to prevent errors. During the test, each version should receive an equal volume of traffic from the same source, drawn from your existing traffic, so the findings are consistent. The time at which visitors arrive can have a strong impact on conversion rates because user responsiveness varies from day to day.
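One common way to split traffic evenly, while making sure a returning visitor always sees the same version, is to hash a stable visitor identifier. The sketch below assumes you have such an identifier (for example a first-party cookie value). The function and experiment names are hypothetical, and testing platforms handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant with roughly equal odds."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "homepage-headline")] += 1
    print(counts)  # the two counts should be close to each other
```

Because the assignment depends only on the identifier and the experiment name, it needs no stored state and stays stable for the whole test.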

It’s imperative that you allocate adequate duration to your tests so they can gather enough data for reliable outcomes, thus avoiding hasty judgments based on incomplete information. Halting a split test too soon could cause unreliable results stemming from inadequate data accumulation. Refrain from making changes mid-test as this could result in skewed conclusions.

Adhering strictly to these recommended practices facilitates efficient execution and supervision of your split tests, culminating in precise and practical insights.

Analyzing Split Test Results

Reaching statistical significance in a split test indicates that the outcome is unlikely to be a coincidence. Aim for a confidence level of at least 95% before making business decisions based on split test findings. The metrics to assess should include primary indicators such as conversion rates, click-through rates, and revenue generation. These insights can also inform future experiments.
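A 95% confidence threshold corresponds to a p-value below 0.05. One standard way to check it for conversion rates is a pooled two-proportion z-test, sketched below with only the Python standard library. The function name and the example counts are assumptions, and your testing tool may use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: A converts 200 of 4,000, B converts 260 of 4,000.
    z, p = two_proportion_z_test(200, 4_000, 260, 4_000)
    print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

A positive z means B converted better than A. The p-value says how surprising a gap this large would be if the two versions truly performed the same.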

Upon completing a split test, it’s important to evaluate any increases in key metrics to ascertain success levels. Keeping detailed records of each experiment’s results can improve strategic decision-making processes going forward. In instances where a split test fails to produce an unequivocal winner, delve deeper into the data for useful trends or insights regarding audience behaviors.

In cases where a split test underperforms, harnessing its data becomes crucial for gathering information that will refine subsequent experiments. Detailed examination of your split test results is vital for enabling choices rooted in analytics aimed at perfecting web page performance.

Common Mistakes to Avoid in Split Testing

A significant mistake in split testing is the absence of a clear hypothesis, which can cause attention to be diverted towards non-essential metrics. Conducting tests with inadequate user traffic may produce untrustworthy outcomes that do not reach the threshold for statistical significance, making it crucial to consider website traffic in your planning. Ignoring mobile users during this process could distort findings, considering that they constitute over half of global web traffic.

Testing too many variables at the same time can muddle results and make it hard to tell which change caused an observed effect, or whether the effect is just random noise. Ending a test too early, before it reaches statistical significance, can lead to wrong conclusions about performance, particularly if proper statistical analysis has been neglected.
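The risk of testing many things at once can be made concrete with a little arithmetic. Assuming the comparisons are independent, which is a simplification, the chance of at least one false positive grows quickly with the number of comparisons. The Bonferroni adjustment shown is one conservative remedy among several, and both function names are illustrative.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / comparisons


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(k, round(family_wise_error_rate(0.05, k), 3), bonferroni_alpha(0.05, k))
```

With ten comparisons at the usual 0.05 threshold, the chance of at least one spurious "winner" is about 40%, which is why focused tests are easier to trust.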

Steering clear of these prevalent errors will enhance the efficacy and dependability of split-testing results.

Best Practices for Successful Split Testing

Successfully conducting a split test hinges on three essential components: devising a solid hypothesis, determining the right sample size, and employing an accurate split-testing tool. Utilizing a split testing methodology facilitates data-driven decision-making in website design and marketing strategies. Such testing is crucial for marketers because it allows decisions to be grounded in real user behavior rather than mere speculation.

Split testing plays a pivotal role in improving the user experience as it pinpoints which design features and various layouts resonate most with users. By focusing on key elements of split testing early on, potential pitfalls associated with product modifications are minimized and perpetual enhancement is promoted. Adhering to these established guidelines guarantees that your tests are reliable and provide valuable conclusions.

Advanced Split Testing Techniques

Advanced split testing techniques involve using multivariate testing to test multiple elements simultaneously. This approach allows businesses to test various combinations of elements, such as headlines, images, and calls-to-action, to determine which combination performs best. Additionally, businesses can use machine learning algorithms to analyze test results and identify patterns in user behavior. By using these advanced techniques, businesses can gain a deeper understanding of their target audience and create more effective marketing campaigns. It’s also important to consider the concept of statistical significance when conducting split tests, to ensure that the results are meaningful and not due to random chance. Furthermore, businesses can use split testing to test different page layouts, subject lines, and email content to boost conversions and improve user engagement.
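To see why multivariate tests need far more traffic than a simple A/B test, it helps to enumerate the combinations. The sketch below builds a full-factorial set from hypothetical headline, image, and call-to-action options. The element names and values are invented for illustration.

```python
from itertools import product


def build_combinations(elements):
    """Return every combination of options as a list of dictionaries.

    elements maps an element name to the list of options to test for it.
    """
    names = list(elements)
    option_lists = [elements[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*option_lists)]


if __name__ == "__main__":
    elements = {
        "headline": ["Direct", "Imaginative"],
        "image": ["Product photo", "Lifestyle photo"],
        "cta": ["Sign up for free", "Trial for free", "Get started"],
    }
    combos = build_combinations(elements)
    print(len(combos))  # 2 * 2 * 3 = 12 combinations
    print(combos[0])
```

Each combination needs its own adequately sized sample, so the traffic requirement scales with the number of combinations.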

Tools for Split Testing

Tools commonly recommended for split testing web pages include Unbounce and Optimizely, and formerly Google Optimize, which Google discontinued in September 2023. These platforms are user-friendly and provide comprehensive features to design and execute experiments, including tests on different segments of a marketing campaign. For example, Google Optimize enabled the creation of a landing page variant specifically for targeted split tests.

Several platforms also offer automated features that enable follow-up actions based on the results of these tests, thereby improving overall testing tactics. Additional analytics tools such as Google Analytics 4 and Hotjar can enhance split testing efforts by offering insights into user behavior.

Employing these various tools effectively can lead to significant enhancements in users’ online experiences and boost their propensity to complete desired actions on your site.

Real-World Examples of Split Testing Success

Successful split testing can increase return on investment by improving key metrics like sales and sign-ups. For example, Going achieved a 104% increase in trial starts by changing its call-to-action from ‘Sign up for free’ to ‘Trial for free’. Campaign Monitor saw a 31.4% boost in conversions by dynamically matching landing page text to users’ search terms and testing different page elements.

First Midwest Bank’s creative A/B tests resulted in a 195% increase in overall conversions by customizing landing pages for local demographics. TechInsurance improved its PPC conversion rate by 73% with a dedicated landing page tailored to its ad audience.

These examples demonstrate the significant impact split testing can have on business metrics, and the specific figures may inspire readers to implement similar strategies.

Split Testing for E-commerce

Split testing is crucial for e-commerce businesses, as it allows them to optimize their product pages, landing pages, and marketing campaigns to increase conversions and average order value. By using split testing tools, e-commerce businesses can test different product page layouts, images, and descriptions to determine which version performs best. Additionally, businesses can use split testing to optimize their checkout process, test different payment options, and improve user experience. It’s also important to consider the concept of bounce rate and how it can impact conversion rates. By using split testing, e-commerce businesses can identify areas for improvement, collect relevant data, and make data-driven decisions to drive business growth.

Future of Split Testing

The future of split testing is exciting, with the increasing use of machine learning and artificial intelligence to analyze test results and predict user behavior. Additionally, the use of multivariate testing and advanced statistical analysis will become more prevalent, allowing businesses to gain a deeper understanding of their target audience and create more effective marketing campaigns. Furthermore, the integration of split testing with other digital marketing tools, such as Google Analytics, will become more seamless, allowing businesses to make data-driven decisions and optimize their marketing campaigns in real-time. As the digital landscape continues to evolve, split testing will remain an essential tool for businesses to optimize their website, improve user experience, and drive business growth. By staying up-to-date with the latest split testing techniques and tools, businesses can stay ahead of the competition and achieve their marketing goals.

Summary

In summary, mastering split testing is essential for any digital marketer looking to optimize their web pages and marketing campaigns. By understanding the benefits, techniques, and best practices, you can run split tests effectively and make data-driven decisions that enhance user engagement and increase conversions, as long as your tests reach statistical significance.

Remember, the key to successful split testing lies in continuous experimentation and learning from your results. Start small, stay focused, and let the data guide your decisions. With the right approach and tools, you can transform your digital marketing efforts and achieve remarkable results.

Frequently Asked Questions

What is split testing?

Split testing, or A/B testing, involves comparing two versions of a webpage to identify which one yields better results. This method can also be used to test subject lines in email marketing.

This method helps optimize user experience and improve conversion rates.

Why is split testing important?

Split testing plays a vital role in improving both website efficiency and the user experience by identifying which changes have the most significant effect.

By adopting split testing, one can achieve higher conversion rates and an enhanced overall performance of the website.

What are the key benefits of split testing?

The key benefits of split testing are increased conversions, optimized future campaigns, and valuable insights into audience segments. By implementing split testing, you can significantly enhance your marketing effectiveness.

How do I identify the objective for a split test?

To set a goal for a split test, scrutinize the data from your website to determine crucial metrics, with an emphasis on either pages that receive substantial traffic or those exhibiting poor conversion rates.

By doing so, you concentrate your testing activities on areas where they can make the most significant difference in performance.

What are some common mistakes to avoid in split testing?

To ensure effective split testing, avoid the common pitfalls of not having a defined hypothesis, running tests with inadequate user traffic, testing excessive variables, and concluding tests too early.

Staying focused and methodical will enhance your testing outcomes.